Where Every Scroll is a New Adventure

Neural Networks - Blog Posts

My new experiments with neural networks. I made fun of my avatar again :)

If you are interested, the details are on my blog site: www.andarky.site/novye-opyty-s-nejrosetjami

You have just found Keras. Keras is a minimalist, highly modular neural network library in the spirit of Torch, written in Python. (Keras: Theano-based Deep Learning library)

Fast Deep Learning Prototyping for Python [reddit]

submitted by mignonmazion

Paint colors designed by neural network, Part 2

So it turns out you can train a neural network to generate paint colors if you give it a list of 7,700 Sherwin-Williams paint colors as input. A neural network basically works by looking at a set of data - in this case, a long list of Sherwin-Williams paint color names and the RGB (red, green, blue) numbers that represent each color - and trying to form its own rules about how to generate more data like it.

Last time I reported results that were, well… mixed. The neural network produced colors, all right, but it hadn’t gotten the hang of producing appealing names to go with them - instead producing names like Rose Hork, Stanky Bean, and Turdly. It also had trouble matching names to colors, and would often produce an “Ice Gray” that was a mustard yellow, for example, or a “Ferry Purple” that was decidedly brown.

These were not great names.

There are lots of things that affect how well the algorithm does, however.

One simple change turns out to be the “temperature” (think: creativity) variable, which adjusts whether the neural network always picks the most likely next character as it’s generating text, or whether it will go with something farther down the list. I originally had the temperature set pretty high, but it turns out that when I turn it down ever so slightly, the algorithm does a lot better. Not only do the names better match the colors, but it begins to reproduce color gradients that must have been in the original dataset all along. Colors tend to be grouped together in these gradients, so it shifts gradually from greens to browns to blues to yellows, etc., and does eventually cover the rainbow, not just beige.

Apparently it was trying to give me better results, but I kept screwing it up.
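For the curious, here is roughly what a temperature knob does. This is a minimal Python sketch, assuming the common approach of dividing the network's raw scores (logits) by the temperature before turning them into probabilities; the function names and the example scores are made up for illustration, and this is not char-rnn's actual code. A low temperature piles nearly all the probability onto the top choice, while a high one spreads it out so stranger characters get picked more often.

```python
import math
import random


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities, sharpened or flattened by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    biggest = max(scaled)  # subtract the max so exp() cannot overflow
    exps = [math.exp(x - biggest) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_next_char(chars, logits, temperature, rng):
    """Pick the next character at random, weighted by the tempered probabilities."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(chars, weights=probs, k=1)[0]


# Hypothetical scores for three candidate next characters
chars = ["e", "a", "z"]
logits = [2.0, 1.0, 0.1]
rng = random.Random(0)
for t in (0.5, 1.0, 1.5):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs], sample_next_char(chars, logits, t, rng))
```

Turning the temperature down even slightly shifts probability toward the most likely character, which matches the behavior described above.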

Raw output from RGB neural net, now less annoyed by my temperature setting

People also sent in suggestions on how to improve the algorithm. One of the most frequent was to try a different way of representing color - it turns out that RGB (with a single color represented by the amount of Red, Green, and Blue in it) isn’t very well matched to the way human eyes perceive color.

These are some results from a different color representation, known as HSV. In HSV representation, a single color is represented by three numbers like in RGB, but this time they stand for Hue, Saturation, and Value. You can think of the Hue number as representing the color, Saturation as representing how intense (vs gray) the color is, and Value as representing the brightness. Other than the way of representing the color, everything else about the dataset and the neural network is the same. (char-rnn, 512 neurons and 2 layers, dropout 0.8, 50 epochs)
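If you want to try the same conversion on your own color list, Python's standard library can do it. This is a sketch assuming 8-bit (0-255) RGB input; the post doesn't say how the HSV numbers were scaled for the network, so the choice of degrees for hue and percentages for saturation and value here is just one hypothetical option.

```python
import colorsys


def rgb255_to_hsv(red, green, blue):
    """Convert 0-255 RGB to (hue in degrees, saturation %, value %).

    The degree/percent scaling is an assumption for readability;
    colorsys itself works with floats in the range 0-1.
    """
    h, s, v = colorsys.rgb_to_hsv(red / 255.0, green / 255.0, blue / 255.0)
    # Round to whole numbers so each color is a short string of digits
    return round(h * 360) % 360, round(s * 100), round(v * 100)


print(rgb255_to_hsv(255, 0, 0))    # pure red
print(rgb255_to_hsv(128, 128, 128))  # a mid gray: zero saturation
```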

Raw output from HSV neural net:

And here are some results from a third color representation, known as LAB. In this color space, the first number stands for lightness, the second number stands for the amount of green vs red, and the third number stands for the amount of blue vs yellow.
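LAB takes a couple more steps to get to from RGB. Here is a sketch of the standard conversion in Python, assuming the input is 8-bit sRGB and using the D65 white point; the post doesn't say which conversion settings were actually used, so treat those as assumptions. Note how the signs line up with the description above: negative a means greener, positive means redder, negative b means bluer, positive means yellower.

```python
def srgb_to_lab(red, green, blue):
    """Convert 8-bit sRGB (0-255) to CIE L*a*b*, assuming a D65 white point."""

    def to_linear(v):
        # Undo the sRGB gamma curve to get linear light
        c = v / 255.0
        return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4

    rl, gl, bl = to_linear(red), to_linear(green), to_linear(blue)

    # Linear sRGB -> CIE XYZ (D65 primaries)
    x = 0.4124564 * rl + 0.3575761 * gl + 0.1804375 * bl
    y = 0.2126729 * rl + 0.7151522 * gl + 0.0721750 * bl
    z = 0.0193339 * rl + 0.1191920 * gl + 0.9503041 * bl

    # D65 reference white
    xn, yn, zn = 0.95047, 1.00000, 1.08883

    def f(t):
        # Cube root, with a linear segment near zero
        delta = 6.0 / 29.0
        if t > delta ** 3:
            return t ** (1.0 / 3.0)
        return t / (3 * delta ** 2) + 4.0 / 29.0

    fx, fy, fz = f(x / xn), f(y / yn), f(z / zn)
    lightness = 116.0 * fy - 16.0
    a = 500.0 * (fx - fy)  # negative = green, positive = red
    b = 200.0 * (fy - fz)  # negative = blue, positive = yellow
    return lightness, a, b


print(srgb_to_lab(255, 255, 255))  # white: lightness near 100, a and b near 0
```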

Raw output from LAB neural net:

It turns out that the color representation doesn’t make a very big difference in how good the results are (at least as far as I can tell with my very simple experiment). Surprisingly, RGB seems best able to reproduce the gradients from the original dataset - maybe it’s more resistant to disruption when the temperature setting introduces randomness.

And the color names are pretty bad, no matter how the colors themselves are represented.

However, a blog reader compiled this dataset, which has paint colors from other companies such as Behr and Benjamin Moore, as well as a bunch of user-submitted colors from a big XKCD survey. He also changed all the names to lowercase, so the neural network wouldn’t have to learn two versions of each letter.
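Here is a tiny illustration of why lowercasing helps a character-level network: it shrinks the set of distinct characters the network has to learn. This is a hypothetical sketch (the helper name and sample names are just for illustration), not the reader's actual preprocessing script.

```python
def char_vocab(names, lowercase=False):
    """Collect the set of distinct characters across a list of color names."""
    chars = set()
    for name in names:
        chars.update(name.lower() if lowercase else name)
    return chars


names = ["Ice Gray", "ice gray"]
print(len(char_vocab(names)), len(char_vocab(names, lowercase=True)))
```

With lowercasing, "I" and "i" (and "G" and "g") become the same symbol, so the network sees each letter in only one form.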

And the results were… surprisingly good. Pretty much every name was a plausible match to its color (even if it wasn’t a plausible color you’d find in the paint store). The answer seems to be, as it often is for neural networks: more data.

Raw output using The Big RGB Dataset:

I leave you with the Hall of Fame:

RGB:

HSV:

LAB:

Big RGB dataset:

A neural network invents diseases you don’t want to get

Science fiction writers and producers of TV medical dramas: have you ever needed to invent a serious-sounding disease whose symptoms, progression, and cure you can utterly control? Artificial intelligence can help!

Blog reader Kate very kindly compiled a list of 3,765 common names for conditions from this site, and I gave them to an open-source machine learning algorithm called a recurrent neural network, which learns to imitate its training data. Given enough examples of real-world diseases, a neural network should be able to invent enough plausible-sounding syndromes to satisfy any hypochondriac.

Early on in the training, the neural network was producing what were identifiably diseases, but probably wouldn’t fly in a medical drama. “I’m so sorry. You have… poison poison tishues.”

Much Esophageal Eneetems Vomania Poisonicteria Disease Eleumathromass Sexurasoma Ear Allergic Antibody Insect Sculs Poison Poison Tishues Complex Disease

As the training got going, the neural network began to learn to replicate more of the real diseases - lots of ventricular syndromes, for example. But the made-up diseases still weren’t too convincing, and maybe even didn’t sound like diseases at all. (Except for RIP Syndrome. I’d take that one seriously.)

Seal Breath Tossy Blanter Cancer of Cancer Bull Cancer Spisease Lentford Foot Machosaver RIP Syndrome

The neural network eventually progressed to a stage where it was producing diseases of a few basic varieties:

First kind of disease: This isn’t really a disease. The neural network has just kind of named a body part, or a couple of really generic disease-y words. Pro writer tip: don’t use these in your medical drama.

Fevers Heading Disorder Rashimia Causes Wound Eye Cysts of the Biles Swollen Inflammation Ear Strained Lesions Sleepys Lower Right Abdomen Degeneration Disease Cancer of the Diabetes

Second kind of disease: This disease doesn’t exist, and sounds reasonably convincing to me, though it would probably have a different effect on someone with actual medical training.

Esophagia Pancreation Vertical Hemoglobin Fever Facial Agoricosis Verticular Pasocapheration Syndrome Agpentive Colon Strecting Dissection of the Breath Bacterial Fradular Syndrome Milk Tomosis Lemopherapathy Osteomaroxism Lower Veminary Hypertension Deficiency Palencervictivitis Asthodepic Fever Hurtical Electrochondropathy Loss Of Consufficiency Parpoxitis Metatoglasty Fumple Chronosis Omblex's Hemopheritis Mardial Denection Pemphadema Joint Pseudomalabia Gumpetic Surpical Escesion Pholocromagea Helritis and Flatelet’s Ear Asteophyterediomentricular Aneurysm

Third kind of disease: Sounds both highly implausible and pretty darn serious. I’d definitely get that looked at.

Ear Poop Orgly Disease Cussitis Occult Finger Fallblading Ankle Bladders Fungle Pain Cold Gloating Twengies Loon Eye Catdullitis Black Bote Headache Excessive Woot Sweating Teenagerna Vain Syndrome Defentious Disorders Punglnormning Cell Conduction Hammon Expressive Foot Liver Bits Clob Sweating,Sweating,Excessive Balloblammus Metal Ringworm Eye Stools Hoot Injury Hoin and Sponster Teenager’s Diarey Eat Cancer Cancer of the Cancer Horse Stools Cold Glock Allergy Herpangitis Flautomen Teenagees Testicle Behavior Spleen Sink Eye Stots Floot Assection Wamble Submoration Super Syndrome Low Life Fish Poisoning Stumm Complication Cat Heat Ovarian Pancreas 8 Poop Cancer Of Hydrogen Bingplarin Disease Stress Firgers Causes of the ladder Exposure Hop D Treat Decease

Diseases of the fourth kind: These are the, um, reproductive-related diseases. And those that contain unprintable four-letter words. They usually sound ludicrous, and entirely uncomfortable, all at the same time. And I really don’t want to print them here. However! If you are in possession of a sense of humor and an email address, you can let me know here and I’ll send them to you.

A neural network invents some pies

(Pie -> cat courtesy of https://affinelayer.com/pixsrv/ )

I work with neural networks, which are a type of machine learning computer program that learn by looking at examples. They’re used for all sorts of serious applications, like facial recognition and ad targeting and language translation. I, however, give them silly datasets and ask them to do their best.

So, for my latest experiment, I collected the titles of 2237 sweet and savory pie recipes from a variety of sources including Wikipedia and David Shields. I simply gave them to a neural network with no explanation (I never give it an explanation) and asked it to try to generate more.

Its very first attempt left something to be desired, but it had figured out that “P”, “i”, and “e” were important somehow.

e Piee i m t iee ic ic Pa ePeeetae a e eee ema iPPeaia eieer i i i ie e eciie Pe eaei a

Second checkpoint. Progress: Pie.

Pie Pee Pie Pimi Pie Pim Cue Pie Pie (er Wie Pae Pim Piu Pie Pim Piea Cre Pia Pie Pim Pim Pie Pie Piee Pie Piee

This is expected, since the word “pie” is both simple and by far the most common word in the dataset. It stays in the stage above for rather a while, able to spell only “Pie” and nothing else. It’s like evolution trying to get past the single-celled organism stage. After 4x more time has elapsed, it finally adds a few more words: “apple”, “cream”, and “tart”. Then, at the sixth checkpoint, “pecan”.

Seventh checkpoint: These are definitely pies. We are still working on spelling “strawberry”, however.

Boatin Batan Pie Shrawberry Pie With An Cream Pie Cream Pie Sweesh Pie Ipple Pie Wrasle Cream Pie Swrawberry Pie Cream Pie Sae Fart Tart Cheem Pie Sprawberry Cream Pie Cream Pie

10th checkpoint. Still working.

Coscard Pie Tluste Trenss Pie Wot Flustickann Fart Oag’s Apple Pie Daush Flumberry O Cheesaliane Rutter Chocklnd Apple Rhupperry pie Flonberry Peran Pie Blumbberry Cream Pie Futters Whabarb Wottiry Rasty Pasty Kamphible Idponsible Swarlot Cream Cream Cront

16th checkpoint. Showing some signs of improvement? Maybe. It thinks Qtrupberscotch is a thing.

Buttermitk Tlreed whonkie Pie Spiatake Bog Pastry Taco Custard Pie Apple Pie With Pharf Calamed apple Freech Fodge Cranberry Rars Farb Fart Feep-Lisf Pie With Qpecisn-3rnemerry Fluit Turd Turbyy Raisin Pie Forp Damelnut Pie Flazed Berry Pie Figi’s Chicken Sugar Pie Sauce and Butterm’s Spustacian Pie Fill Pie With Boubber Pie Bok Pie Booble Rurble Shepherd’s Parfate Ner with Cocoatu Vnd Pie Iiakiay Coconate Meringue Pie With Spiced Qtrupberscotch Apple Pie Bustard Chiffon Pie

Finally we arrive at what, according to the neural network, is Peak Pie. It tracks its own progress by testing itself against the original dataset and scoring itself, and here is where it thinks it did the best.

It did in fact come up with some that might actually work, in a ridiculously decadent sort of way.

Baked Cream Puff Cake Four Cream Pie Reese’s Pecan Pie Fried Cream Pies Eggnog Peach Pie #2 Fried Pumpkin Pie Whopper pie Rice Krispie-Chiffon Pie Apple Pie With Fudge Treats Marshmallow Squash Pie Pumpkin Pie with Caramelized Pie Butter Pie

But these don’t sound very good actually.

Strawberry Ham Pie Vegetable Pecan Pie Turd Apple Pie Fillings Pin Truffle Pie Fail Crunch Pie Crust Turf Crust Pot Beep Pies Crust Florid Pumpkin Pie Meat-de-Topping Parades Or Meat Pies Or Cake #1 Milk Harvest Apple Pie Ice Finger Sugar Pie Amazon Apple Pie Prize Wool Pie Snood Pie Turkey Cinnamon Almond-Pumpkin Pie With Fingermilk Pumpkin Pie With Cheddar Cookie Fish Strawberry Pie Butterscotch Bean Pie Impossible Maple Spinach Apple Pie Strawberry-Onions Marshmallow Cracker Pie Filling Caribou Meringue Pie

And I have no idea what these are:

Stramberiy Cheese Pie The pon Pie Dississippi Mish Boopie Crust Liger Strudel Free pie Sneak Pie Tear pie Basic France Pie Baked Trance pie Shepherd’s Finger Tart Buster’s Fib Lemon Pie Worf Butterscotch Pie Scent Whoopie Grand Prize Winning I*iple Cromberry Yas Law-Ox Strudel Surf Pie, Blue Ulter Pie - Pitzon’s Flangerson’s Blusty Tart Fresh Pour Pie Mur’s Tartless Tart

More of the neural network’s attempts to understand what humans like to eat:

Perhaps my favorite: Small Sandwiches

All my other neural network recipe experiments here.

Want more than that? I’ve got a bunch more recipes that I couldn’t fit in this post. Enter your email here and I’ll send you 38 more selected recipes.

Want to help with neural network experiments? For NaNoWriMo I’m crowdsourcing a dataset of novel first lines, after the neural network had trouble with a too-small dataset. Go to this form (no email necessary) and enter the first line of your novel, or your favorite novel, or of every novel on your bookshelf. You can enter as many as you like. At the end of the month, I’ll hopefully have enough sentences to give this another try.

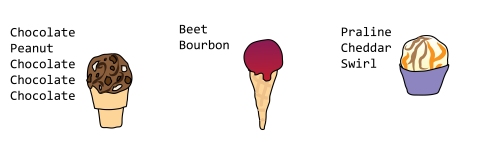

Generated ice cream flavors: now it’s my turn

Last week, I featured new ice cream flavors generated by Ms. Johnson’s coding classes at Kealing Middle School in Austin, Texas. Their flavors were good - much better than mine, in fact. In part, this was because they had collected a much larger dataset than I had, and in part this was because they hadn’t accidentally mixed the dataset with metal bands.

(the three at the bottom were mine)

But Ms. Johnson’s coding classes are not only adept with textgenrnn, they’re also generous - they kindly gave me their dataset of 1,600 ice cream flavors. They wanted to see what I would come up with.

So, I fired up char-rnn, a neural network framework I’ve used for a lot of my text-generating experiments - one that starts from scratch each time, with no memory of its previous dataset. There was no chance of getting metal band names in my ice cream this time.

But even so, I ended up with some rather edgy-sounding flavors. There was a flavor in the input dataset called Death by Chocolate, and I blame blood oranges for some of the rest, but “nose” was nowhere in the input, candied or otherwise. Nor was “turd”, for that matter. Ice cream places are getting edgy these days, but not THAT edgy.

Bloodie Chunk Death Bean Goat Cookie Peanut Bat Bubblegum Cheesecake Rawe Blueberry Fist Candied Nose Creme die Mucki Ant Cone Apple Pistachio mouth Chocolate Moose Mange Dime Oil Live Cookie Bubblegum Chocolate Basil Aspresso Lime Pig Beet Bats Blood Sundae Elterfhawe Monkey But Kaharon Chocolate Mouse Gun Gu Creamie Turd

Not all the flavors were awful, though. The neural network actually did a decent job of coming up with trendy-sounding ice cream flavors. I haven’t seen these before, but I wouldn’t be entirely surprised if I did someday.

Smoked Butter Lemon-Oreo Bourbon Oil Strawberry Churro Roasted Beet Pecans Cherry Chai Grazed Oil Green Tea Coconut Root Beet Peaches Malted Black Madnesss Chocolate With Ginger Lime and Oreo Pumpkin Pomegranate Chocolate Bar Smoked Cocoa Nibe Carrot Beer Red Honey Candied Butter Lime Cardamom Potato Chocolate Roasted Praline Cheddar Swirl Toasted Basil Burnt Basil Beet Bourbon Black Corn Chocolate Oreo Oil + Toffee Milky Ginger Chocolate Peppercorn Cookies & Oreo Caramel Chocolate Toasted Strawberry Mountain Fig n Strawberry Twist Chocolate Chocolate Chocolate Chocolate Road Chocolate Peanut Chocolate Chocolate Chocolate Japanese Cookies n'Cream with Roasted Strawberry Coconut

These next flavors seem unlikely, however.

Mann Beans Cherry Law Rhubarb Cram Spocky Parstita Green Tea Cogbat Cheesecake With Bear Peanut Butter Cookies nut Butter Brece Toasterbrain Blueberry Rose The Gone Butter Fish Fleek Red Vanill Mounds of Jay Roasted Monster Dream Sweet Chocolate Mouse Cookies nutur Coconut Chocolate Fish Froggtow Tie Pond Cookies naw Mocoa Pistachoopie Garl And Cookie Doug Burble With Berry Cake Peachy Bunch Kissionfruit Bearhounds Gropky Pum Stuck Brownie Vanilla Salted Blueberry Bumpa Thyme Mountain Bluckled Bananas Lemon-Blueberry Almernuts Gone Cream with Rap Chocolate Cocoa Named Honey

For the heck of it, I also used textgenrnn to generate some more ice creams mixed with metal bands, this time on purpose.

Swirl of Hell Person Cream Dead Cherry Tear Nightham Toffee

For the rest of these, including the not-quite-PG flavors, enter your email here.

A researcher wrote about why neural networks like picdescbot hallucinate so many sheep – and yet will miss a sheep right in front of them if it’s in an unusual context. Enjoy!

(Fig.1. Neuron connections in biological neural networks. Source: MIPT press office)

Physicists build “electronic synapses” for neural networks

A team of scientists from the Moscow Institute of Physics and Technology (MIPT) have created prototypes of “electronic synapses” based on ultra-thin films of hafnium oxide (HfO2). These prototypes could potentially be used in fundamentally new computing systems. The paper has been published in the journal Nanoscale Research Letters.

The group of researchers from MIPT have made HfO2-based memristors measuring just 40 × 40 nm². The nanostructures they built exhibit properties similar to those of biological synapses. Using newly developed technology, the memristors were integrated in matrices: in the future this technology may be used to design computers that function similarly to biological neural networks.

Memristors (resistors with memory) are devices that are able to change their state (conductivity) depending on the charge passing through them, and they therefore have a memory of their “history”. In this study, the scientists used devices based on thin-film hafnium oxide, a material that is already used in the production of modern processors. This means that this new lab technology could, if required, easily be used in industrial processes.

“In a simpler version, memristors are promising binary non-volatile memory cells, in which information is written by switching the electric resistance – from high to low and back again. What we are trying to demonstrate are much more complex functions of memristors – that they behave similar to biological synapses,” said Yury Matveyev, the corresponding author of the paper, and senior researcher of MIPT’s Laboratory of Functional Materials and Devices for Nanoelectronics, commenting on the study.

Synapses – the key to learning and memory

A synapse is a point of connection between neurons, the main function of which is to transmit a signal (a spike, a particular type of signal; see fig. 2) from one neuron to another. Each neuron may have thousands of synapses, i.e. connect with a large number of other neurons. This means that information can be processed in parallel, rather than sequentially (as in modern computers). This is the reason why “living” neural networks are so immensely effective, both in terms of speed and energy consumption, in solving a large range of tasks, such as image and voice recognition.

(Fig.2 The type of electrical signal transmitted by neurons (a “spike”). The red lines are various other biological signals, the black line is the averaged signal. Source: MIPT press office)

Over time, synapses may change their “weight”, i.e. their ability to transmit a signal. This property is believed to be the key to understanding the learning and memory functions of the brain.

From the physical point of view, synaptic “memory” and “learning” in the brain can be interpreted as follows: the neural connection possesses a certain “conductivity”, which is determined by the previous “history” of signals that have passed through the connection. If a synapse transmits a signal from one neuron to another, we can say that it has high “conductivity”, and if it does not, we say it has low “conductivity”. However, synapses do not simply function in on/off mode; they can have any intermediate “weight” (intermediate conductivity value). Accordingly, if we want to simulate them using certain devices, these devices will also have to have analogous characteristics.
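To make the “intermediate weight” idea concrete, here is a toy Python model of an analog, synapse-like device. It is purely illustrative: the conductance range, the step size, and the saturating update rule are hypothetical choices, not the hafnium oxide device physics or measurements from the MIPT paper. Each potentiating pulse nudges the conductance part of the way toward its maximum, each depressing pulse part of the way toward its minimum, and the “transmitted signal” is just the current given by Ohm’s law.

```python
from dataclasses import dataclass


@dataclass
class ToySynapse:
    """A hypothetical analog synapse whose conductance changes in small steps."""

    g_min: float = 1e-6  # hypothetical minimum conductance, in siemens
    g_max: float = 1e-4  # hypothetical maximum conductance, in siemens
    g: float = 1e-6      # current conductance (starts at the minimum)
    step: float = 0.1    # hypothetical fraction of the remaining range moved per pulse

    def potentiate(self):
        # Move part of the way toward g_max, so the weight saturates rather than jumps
        self.g += self.step * (self.g_max - self.g)

    def depress(self):
        # Move part of the way back toward g_min
        self.g -= self.step * (self.g - self.g_min)

    def current(self, voltage):
        # Ohm's law: the transmitted "signal" is I = G * V
        return self.g * voltage


syn = ToySynapse()
for pulse in range(5):
    syn.potentiate()
    print(pulse + 1, f"{syn.g:.3e}")  # a series of intermediate conductance values
```

Unlike a binary memory cell, which would only ever sit at one of the two extremes, this device passes through many intermediate conductance values as pulses arrive.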

The memristor as an analogue of the synapse

As in a biological synapse, the value of the electrical conductivity of a memristor is the result of its previous “life” – from the moment it was made.

There are a number of physical effects that can be exploited to design memristors. In this study, the authors used devices based on ultrathin-film hafnium oxide, which exhibit the effect of soft (reversible) electrical breakdown under an applied external electric field. Most often, these devices use only two different states, encoding logic zero and one. However, in order to simulate biological synapses, a continuous spectrum of conductivities had to be used in the devices.

“The detailed physical mechanism behind the function of the memristors in question is still debated. However, the qualitative model is as follows: in the metal–ultrathin oxide–metal structure, charged point defects, such as vacancies of oxygen atoms, are formed and move around in the oxide layer when exposed to an electric field. It is these defects that are responsible for the reversible change in the conductivity of the oxide layer,” says the co-author of the paper and researcher of MIPT’s Laboratory of Functional Materials and Devices for Nanoelectronics, Sergey Zakharchenko.

The authors used the newly developed “analogue” memristors to model various learning mechanisms (“plasticity”) of biological synapses. In particular, this involved functions such as long-term potentiation (LTP) or long-term depression (LTD) of a connection between two neurons. It is generally accepted that these functions are the underlying mechanisms of memory in the brain.

The authors also succeeded in demonstrating a more complex mechanism: spike-timing-dependent plasticity, i.e. the dependence of the strength of the connection between neurons on the time interval between the moments they are “triggered”. It had previously been shown that this mechanism is responsible for associative learning, the ability of the brain to find connections between different events.
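A common textbook way to describe spike-timing-dependent plasticity is an exponential timing window: if the receiving neuron fires shortly after the sending one, the connection is strengthened; if it fires shortly before, the connection is weakened; and the effect fades as the gap grows. Below is a minimal Python sketch of that pair-based rule. The amplitudes and time constants are hypothetical, and this is a generic model rather than the curve measured in the paper.

```python
import math


def stdp_weight_change(delta_t_ms, a_plus=0.01, a_minus=0.012,
                       tau_plus=20.0, tau_minus=20.0):
    """Change in connection weight for one pair of spikes.

    delta_t_ms = t_post - t_pre: positive when the receiving neuron fires
    after the sending neuron. All parameter values are hypothetical.
    """
    if delta_t_ms > 0:
        # Cause-then-effect ordering: strengthen (long-term potentiation)
        return a_plus * math.exp(-delta_t_ms / tau_plus)
    if delta_t_ms < 0:
        # Reversed ordering: weaken (long-term depression)
        return -a_minus * math.exp(delta_t_ms / tau_minus)
    return 0.0


for dt in (-40, -10, 10, 40):
    print(dt, round(stdp_weight_change(dt), 5))
```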

To demonstrate this function in their memristor devices, the authors purposefully used an electric signal which reproduced, as far as possible, the signals in living neurons, and they obtained a dependency very similar to those observed in living synapses (see fig. 3).

(Fig.3. The change in conductivity of memristors depending on the temporal separation between “spikes” (right) and the change in potential of the neuron connections in biological neural networks. Source: MIPT press office)

These results allowed the authors to confirm that the elements that they had developed could be considered a prototype of the “electronic synapse”, which could be used as a basis for the hardware implementation of artificial neural networks.

“We have created a baseline matrix of nanoscale memristors demonstrating the properties of biological synapses. Thanks to this research, we are now one step closer to building an artificial neural network. It may only be the very simplest of networks, but it is nevertheless a hardware prototype,” said the head of MIPT’s Laboratory of Functional Materials and Devices for Nanoelectronics, Andrey Zenkevich.

Awesome things you can do (or learn) through TensorFlow. From the site:

A Neural Network Playground

Um, What Is a Neural Network?

It’s a technique for building a computer program that learns from data. It is based very loosely on how we think the human brain works. First, a collection of software “neurons” is created and connected together, allowing them to send messages to each other. Next, the network is asked to solve a problem, which it attempts to do over and over, each time strengthening the connections that lead to success and diminishing those that lead to failure. For a more detailed introduction to neural networks, Michael Nielsen’s Neural Networks and Deep Learning is a good place to start. For a more technical overview, try Deep Learning by Ian Goodfellow, Yoshua Bengio, and Aaron Courville.
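The “strengthen what works, weaken what fails” loop can be seen in its simplest form in a single artificial neuron. Here is a minimal Python sketch using the classic perceptron learning rule to learn logical OR; it is a much simpler cousin of the gradient-descent training used by networks like the ones in the Playground, and the learning rate and epoch count are arbitrary choices.

```python
def predict(weights, bias, x1, x2):
    """A single neuron: fire (1) if the weighted sum of inputs is positive."""
    return 1 if weights[0] * x1 + weights[1] * x2 + bias > 0 else 0


def train_perceptron(samples, epochs=20, lr=0.1):
    """Classic perceptron rule: nudge each weight in proportion to the error."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for (x1, x2), target in samples:
            error = target - predict(weights, bias, x1, x2)  # 0 when the answer was right
            # Connections that would have helped get stronger; ones that hurt get weaker
            weights[0] += lr * error * x1
            weights[1] += lr * error * x2
            bias += lr * error
    return weights, bias


# Logical OR: output 1 if either input is 1
or_samples = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]
w, b = train_perceptron(or_samples)
print(w, b, [predict(w, b, x1, x2) for (x1, x2), _ in or_samples])
```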

GitHub

h-t FlowingData

A few of these I can elaborate on:

Astro Robin Hood is an obscure JRPG with a "Robin Hood IN SPACE" theme. Like many RPGs of the era, it's mostly a Dragon Quest clone, but you can build a party out of several available Merry Men.

Star Trek - The Atlantis Bone is a Japan-exclusive Star Trek game, framed as if it were an episode of the Original Series. Some claim the story comes from an unfilmed TV script for the show, but this has never been confirmed. Japanese fans say it represents the show better than many of the other Star Trek games of the era.

Insection - The Arcade Game is based on a bug-themed space shooter made by an obscure European dev. Oddly, the actual arcade game was never finished or released, as the games were developed concurrently and the arcade version was canceled due to financial issues.

Metal Fighter Blaseball - An oddly misspelled baseball game with a sci-fi theme, similar to Base Wars. Some cute sprites based on tokusatsu characters and aliens, but otherwise a pretty standard baseball game for the era.

Nigel Mansell’s Font Fighting - Japan-exclusive "action education game" meant to teach kids English and to improve handwriting. Borderline unplayable. No one is sure who Nigel Mansell is. EDIT: While some assume the title refers to the race car driver Nigel Mansell, the game doesn’t feature driving at all, nor does it have Mansell’s likeness, so your guess is as good as anyone’s.

Fourteen obscure NES/Famicom ROMs that were never released in North America, according to a neural network:

Power Punker (Europe)

Business Gaiden (Japan)

Astro Robin Hood (Japan)

Entity Rad (Europe)

World Championship Shting (Japan)

Star Trek - The Atlantis Bone (Japan)

Insection - The Arcade Game (Europe)

Captain Player Earth (Japan)

Magic Dark Star Hen (Japan)

Murde - The Fingler’s Quest (Europe)

Metal Fighter Blaseball (Japan) (Rev A)

Smurf the Edify (Japan)

Skate or Space Dive Bashboles (Europe)

Chack'van, Ultimate Game of Power Blam (Japan)

Nigel Mansell’s Font Fighting (Japan)

Video game titles created by a neural network trained on 146,000 games:

Conquestress (1981, Data East) (Arcade)

Deep Golf (1985, Siny Computer Entertainment) (MS-DOS)

Brain Robot Slam (1984, Gremlin Graphics) (Apple IIe)

King of Death 2: The Search of the Dog Space (2010, Capcom; Brøderbund Studios) (Windows)

Babble Imperium (1984, Paradox Interactive) (ZX Spectrum)

High Episode 2: Ghost Band (1984, Melbourne Team) (Apple IIe)

Spork Demo (?, ?) (VIC-20)

Alien Pro Baseball (1989, Square Enix) (Arcade)

Black Mario (1983, Softsice) (Linux/Unix)

Jort: The Shorching (1991, Destomat) (NES)

Battle for the Art of the Coast (1997, Jaleco) (GBC)

Soccer Dragon (1987, Ange Software) (Amstrad CPC)

Mutant Tycoon (2000, Konami) (GBC)

Bishoujo no Manager (2003, author) (Linux/Unix)

Macross Army (Defenders Ball House 2: League Alien) (1991, Bandai) (NES)

The Lost of the Sand Trades 2000 (1990, Sega) (SNES)

Pal Defense (1987, author) (Mac)

(part one, part two)

ganbred swimming sea slug

ganbred amoeboid

ganbred grumpyteuthis, or goblin octopus

ganbred cephalopod, probably

ganbred carrot-in-a-balloon worm

new ideas for lamps in parks

ganbred sun jellyfish

ganbred ghost mask fish

GANbreeder bubble

GANbreeder jellyfish crossbreeding

crossbreeding different but pretty similar GANbreeder results has its uses

Sloppy Demon Collection Fall 2018

extremely haunted sock puppets

Denim Spirit at level 1, 5 and 20